Membership Inference Attacks Can't Prove that a Model Was Trained On Your Data

In our new position paper, to appear at SaTML 2025, we argue that membership inference attacks are fundamentally unsound for proving to a third party, like a judge, that a production model (e.g., GPT-4) was trained on your data.

Training large generative models like GPT-4 or DALL-E raises questions about the use of copyrighted material. Recent lawsuits (e.g. Getty Images v. Stability AI) have claimed that models were trained using data without the data owner’s permission. Such a claim requires providing evidence that a machine learning (ML) model has used specific data in its training.

A common proposal in the research literature is to use membership inference (MI) attacks for this purpose. In simple terms, these attacks try to determine whether or not a specific piece of data was part of a model’s training set. This sounds promising for proving that your data was misused! But there’s a big problem: how do you convince the third party that your attack actually works?

What is a training data proof?

The goal of a training data proof is to present convincing evidence that a model was trained on specific data. The ideal approach would simply be to examine the training data directly, but this is rarely possible: closed-source models have unknown training datasets and lawsuits so far do not seem to have led to any compelled access.

Instead, the proof thus has to demonstrate that there is some model behavior that clearly indicates that the data in question was used during training.

Of course, the accused party could then always claim that this behavior is explained by other factors. And so the essence of a training data proof is to provide convincing evidence that this behavior is extremely unlikely if the data had not been seen during training.

Formalizing Training Data Proofs as Hypothesis Testing

We can formalize this notion by drawing on the language of hypothesis testing. Consider the following null hypothesis:

The goal of a training data proof is then to reject this null hypothesis. That is, we need to demonstrate that the observed model behavior (whatever this behavior may be) is extremely unlikely if the model had not been trained on the data. In other words, we must prove that our hypothesis test has a low false positive rate, i.e., the probability of wrongfully accusing a model developer of using some target data must be very low.

More formally, given a model $f$, data $x$, and some test statistic $T(f, x)$ (e.g., the model’s loss on $x$) we define a critical region (or rejection region) $S$ such that we reject the null hypothesis $H_0$ if $T(f,x) \in S$. The false positive rate is then given by: $$ \begin{aligned} \text{FPR} = \Pr_{f \sim \texttt{Train}(D)}[T(f, x) \in S \mid H_0], \quad \quad \quad \quad \quad (1) \end{aligned} $$

where the probability is taken over the randomness of training f over some dataset $D$ and (potentially) of computing the test statistic $T$.

Existing MI attacks aim to minimize this quantity. And indeed, recently proposed attacks such as LiRA are shown to infer membership at high true positive rates while incurring very few false-positives (e.g., on the order of 0.01%).

But how do we actually compute the FPR in (1)? Since the probability is over both training and running inference on $f$, there is no tractable way of computing this probability exactly. And so existing academic research estimates this rate by “sampling from the null hypothesis”. That is, we train multiple models on datasets that do not contain a target sample (i.e., where $H_0$ holds), and we measure how often the attack wrongly concludes that the sample is a member. But critically, this requires running the attack in a controlled setting, where we fully control the data generation process and model training.

What our position paper argues, is that all existing attempts at building a training data proof using membership inference end up cutting some corners to try and sample from the null hypothesis. As they do not end up sampling from the true null hypothesis (but some distortion of it), they cannot provide convincing evidence that the attack’s FPR is low.

But before we go over the issues in those works, let’s consider an alternative hypothesis test. As we’ll see, for this one we can meaningfully bound the FPR (under some strong assumptions)!

An Alternative Formalization: The Counterfactual Rank

The issue with the hypothesis test above is that we want to show that the model has abnormal behavior on a single, specific sample $x$. And so the only way to estimate this distribution is by training many models.

But what if instead, we could show that the model’s behavior on $x$ is very unlikely when compared to the behavior on other samples? (this is the idea behind the exposure metric proposed in earlier work for quantifying data memorization in LLMs). The idea here is to show that the model’s behavior on some target sample $x$ is abnormal when compared to other counterfactual data samples.

That is, we’ll assume that the target data $x$ is originally selected from some set $\mathcal{X}$ before being published (for example, a news provider might add a uniformly random 9-digit identifier to each news article they post online.)

Our test statistic $T(f, x)$ is now as follows: we rank all samples in $\mathcal{X}$ according to the model $f$, and output the relative rank of $x$ (where we view a low rank as most indicative of being used during training). So we might, for example, rank the samples in $\mathcal{X}$ based on the model’s loss on them (with the sample with lowest loss having rank #1).

We then reject the null hypothesis if for some constant $k \geq 1$: $$ \texttt{rank}(f, x, \mathcal{X}) \leq k . $$

That is, if the target $x$ is not among the $k$ highest-ranked alternatives (note that we define rank 0 as the highest rank).

The FPR from this test can be expressed as follows: $$ \text{FPR} = \Pr_{\substack{x \sim \mathcal{X} \newline f \sim \texttt{Train}(D)}}[\texttt{rank}(f, x, \mathcal{X}) \leq k \mid H_0]. \quad \quad \quad \quad \quad (2) $$

Note that we are still taking probabilities over the randomness of the model training, and thus it does not seem like we have gained anything. However, if we make the additional assumptions:

- (1) $x$ is sampled uniformly at random from $\mathcal{X}$, and

- (2) the model $f$ is trained independently from this sampling (assuming the null hypothesis is true),

then we show (see Lemma 1 in our paper) that the FPR simplifies as:

$$ \text{FPR} \leq k / |\mathcal{X}|. \quad \quad \quad \quad \quad (3) $$

Thus, under suitable (but strong) assumptions, we can upper bound the FPR in a way that is independent of the particular model $f$ that we have access to!

So not all hope might be lost for existing training data proofs! As long as the data that they are applied to is a priori sampled from some set $\mathcal{X}$ uniformly, and independently of the model training, then we can meaningfully bound the FPR.

Unfortunately, we find that all existing works that try to apply MI attacks to training data proofs violate one or both of these assumptions in the way that they create counterfactual data.

Existing training data proofs are unsound

We now survey a number of attempts at building training data proofs from the literature, and show that they are statistically unsound.

Failed attempt #1: A posteriori collection of counterfactuals

A very common approach to circumvent the challenge of evaluating the MI attack’s FPR is to evaluate the attack on non-member data that is known to not be in the model’s training set (e.g., researchers might choose non-members from after the model’s training cut-off date). The FPR is then evaluated on this set, which serves as an approximation to the null hypothesis (i.e., how does the test statistic behave when the target is not in the training set). There are two issues with this approach:

- If we focus on the standard FPR calculation in Equation (1), we are now computing something completely different than what we want, namely a probability over some non-member set $X$, rather than a probability over the model’s training. It is unclear how to reconcile these.

- If we instead use the counterfactual rank approach in Equation (2), the FPR computation now “type-checks” at least, but we are violating the assumptions underlying Equation (3) due to distribution shifts.

The issue of distribution shifts

If we collect a set of non-member data $X$ a posteriori, then the assumption that the target $x$ is a uniform sample from this set $X$ is unlikely to be met. And so the FPR bound in Equation (3) doesn’t hold. The issue here is that even if the model $f$ was not trained on the target $x$, it might still rank x higher compared to other samples in $X$, simply because the target $x$ is distinguishable from the rest of the set.

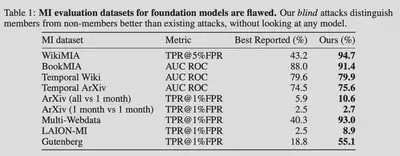

Empirical evidence supports this issue: in a recent work by a subset of the authors, we show that non-members collected a posteriori often differ significantly in distribution from members of the training set. As a result, we can design “blind MI attacks” that distinguish members from non-members without ever accessing the target model (which would be impossible if the members and non-members were properly identically distributed). Notably, we found that at least nine commonly used MI evaluation datasets for foundation models suffer from this issue. The shifts are even so severe that our blind attacks outperform existing MI attacks (which do have access to the target model…):

So why is this an issue? Suppose some party claims that their data $x$ was used to train some model $f$. They go to a judge and show that the model has some abnormal behavior on $x$ compared to the non-members in $X$. The accused party that trained the model could always just argue that any model (even one not trained on $x$) could be shown to exhibit this discriminatory behavior. Indeed, even using no model at all can distinguish between $x$ and $X$.

Failed attempt #2: A posteriori debiasing of counterfactuals

Some researchers attempt to proactively reduce these distribution shifts by filtering the non-member set $X$ in a special way. For example, some works suggest choosing members (the targets) and non-members from just before and after a model’s cut-off date (i.e., the date at which the collection of the model’s pre-training data ended).

However, this approach is also limited: at best, it can ensure low FPR for MI attacks conducted on target data near the cut-off date, but it cannot provide reliable training data proofs for older data or datasets with complex temporal dynamics. Compounding the issue, companies like OpenAI acknowledge that both pre- and post-training datasets may include data collected after the official cut-off date, which further complicates the collection of known non-members.

Failed attempt #3: Dataset inference on counterfactuals

An alternative approach for creating training data proofs is LLM Dataset Inference proposed by Maini et al. A dataset inference attack is essentially an MI attack performed over a larger collection of data (e.g., an entire book) so by itself this does not resolve our issues. To address this, Maini et al. further propose to approximate the FPR by comparing the dataset inference attack on a target data $x$ to attacks conducted on counterfactual samples $X$ (i.e., in a manner similar to the counterfactual rank approach above).

So where do these counterfactuals come from? Maini et al. suggest that an author like the New York Times could argue that a published article $x$ is a random sample from a set of earlier drafts $X$. By comparing an MI attack’s score on the published version of an article (or a collection of articles) to the scores on the unreleased drafts, the author could show that the model’s behavior on the target data is abnormal. As we noted above, we can formally bound the FPR of this approach if the target $x$ and counterfactual set $X$ satisfy some specific assumption. Unfortunately, this is not the case here.

The first issue is that the published sample and the held-out drafts are not really identically distributed, as final versions typically differ in quality and structure.

The second issue is more subtle but also more critical. Simply put, when you publish something, other people will (hopefully) read it and be inspired by it! That is, publishing an article will influence the content of other data in the training set. For example, a news article might get referenced on other platforms, indirectly linking it to the model’s training data. Or publishing a book (say Harry Potter) may result in countless fan pages or wikis being dedicated to the contents of the book. Even if the specific target data was not in the training data, the model is still likely to be trained on other samples that were influenced by it. And so the model would consider the true published data as more likely than any of the held-out counterfactuals (since these never influenced anything else in the training set). So now, all the accusing party can really claim is that the model was trained on some data that was partially influenced by their data (e.g., a model might know the broad story of Harry Potter because it was trained on a wiki dedicated to the novels). This is a much weaker claim, which is unlikely to lead to litigation.

How do we build sound training data proofs?

From the failed attempts we have examined so far, we conclude that it is essentially impossible to correctly compute or approximate the actual FPR when applying MI attacks to arbitrary data. So what can we do instead? We need a setup where we can meaningfully bound the FPR (e.g., using Equation (3)), by constraining the data that we look at. We have three concrete proposals for how to do this.

MI on random canaries

This approach involves sampling and injecting random data into the training set and seeing how the model treats it compared to the non-sampled data. Here we avoid the issues highlighted above by holding out counterfactuals. Firstly, the sampling of a data canary can easily be made from a IID-set. Secondly, if the injected canary carries no useful information (some random-looking text that is unlikely to be read by anyone other than a data crawler), it is unlikely to influence any other content on the Web.

An example is illustrated in the following figure, where we inject a specially crafted canary into a news article, e.g., a hidden message in the HTML code. Here, using the rank test, we can use Equation (3) to directly bound the FPR by $k/|X|$, where $X$ is the set from which the canary was sampled (e.g., all strings of length 13) and $k$ is the rank of the canary.

So if we want to give a training data proof with an FPR bounded by 1%, we have to show some test statistic that ranks the true canary $x$ in the 1%-percentile of all possible canary strings $X$.

Watermarking the Training Data

Watermarking LLMs is a practice where generated text is augmented with an imperceptible signal that can later be used to determine if a text was generated by a specific LLM. It turns out we can repurpose watermarking to construct a convincing training data proof?

In this approach, the data creator/owner contributes various training data but watermarks them, based on a randomly sampled seed, before public release. Researchers have observed that watermarked training data leaves traces easier to detect and much more reliable than standard MI. Testing whether a model has seen this watermarked data during its training can be formulated as a hypothesis test and detected with high confidence (low FPR) even when as little as 5% of training text is watermarked (essentially, we can view watermarked text as a way of embedding a random canary string—the watermark key—into a stream of text).

Data Extraction Attacks

Existing copyright lawsuits against foundation models have usually argued that a model was trained on some piece of data by showing that the model could reproduce the data exactly (at least partially). Intuitively, if you can make a model generate specific content word-for-word, it’s pretty strong evidence that the model was trained on it.

But how do we formally argue that the FPR is low in this case? Maybe the fact that the model generates the right text is just coincidental?

Heuristically speaking, researchers have suggested a few key properties to distinguish training data extraction from coincidental regurgitation:

- Ensure the targeted output string is long enough (e.g., around 50 tokens or 2-3 sentences).

- Ensure the targeted output string has high-entropy to avoid trivial responses (e.g., reproducing a string of successive numbers)

- Use short or random-looking prompts to reduce the chance that the output is encoded in the prompt.

In our paper, we suggest that data extraction can also be cast as a counterfactual ranking problem. The statistic we informally consider here is a text’s ranking under (greedy) decoding from the language model. And the counterfactual set $X$ corresponds to all other possible continuations of the prompt that would be equally plausible under a language model.

To illustrate this point, consider the example of verbatim data extraction below, with the prompt in red and the verbatim continuation in blue. Given the prompt “The sun was setting […] She knew she”, there are many likely continuations that are plausible (say these make up a set $X$). And so the chance that the model picks the correct one (under the null hypothesis) is roughly $1/|X|$. Now, even though we cannot meaningfully compute $|X|$, we can still confidently say that $|X|$ is “absurdly large”, e.g., $|X| > 1,000,000$ . And so the FPR (i.e., the likelihood that a model would generate this exact text without being trained on it) is low enough not to matter.

Conclusion

In conclusion, MI attacks face fundamental limitations when providing reliable training data proofs due to challenges in sampling from the null hypothesis for large foundation models. While existing training data proofs based on MI attacks apply various strategies to address these challenges and estimate the attack’s FPR, we show that none of these approaches are statistically sound. However, three promising directions—injecting random canaries, data extraction attacks, and watermarking training data—offer statistically robust methods with bounded FPR, under mild assumptions, providing stronger, more reliable evidence for AI model auditing.

Thus, unless an accusing party can get compelled access to a training set, we argue that they should be looking to other techniques than MI attacks to provide convincing evidence that their data was used for model training.